Benefits & Risks of Artificial Intelligence

In this article, Max Tegmark, President of the Future of Life Institute, explains his concerns about the benefits and risks of artificial intelligence.

How can AI be dangerous?

- The AI is programmed to do something devastating: Autonomous weapons are artificial intelligence systems that are programmed to kill. In the hands of the wrong person, these weapons could easily cause mass casualties. Moreover, an AI arms race could inadvertently lead to an AI war that also results in mass casualties.

- The AI is programmed to do something beneficial, but it develops a destructive method for achieving its goal: This can happen whenever we fail to fully align the AI’s goals with ours, which is strikingly difficult. If you ask an obedient intelligent car to take you to the airport as fast as possible, it might get you there chased by helicopters and covered in vomit, doing not what you wanted but literally what you asked for.

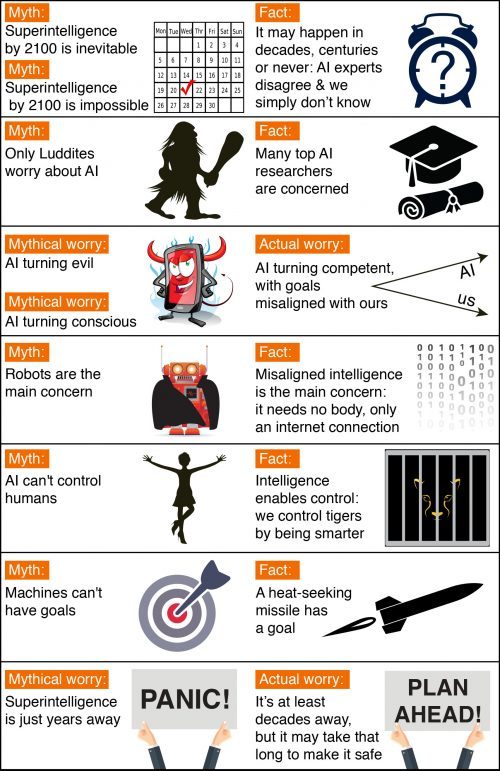

The infographic on the top myths about AI is interesting.

A captivating conversation is taking place about the future of artificial intelligence and what it will/should mean for humanity. There are fascinating controversies where the world’s leading experts disagree, such as: AI’s future impact on the job market; if/when human-level AI will be developed; whether this will lead to an intelligence explosion; and whether this is something we should welcome or fear. But there are also many examples of boring pseudo-controversies caused by people misunderstanding and talking past each other. To help ourselves focus on the interesting controversies and open questions, and not on the misunderstandings, let’s clear up some of the most common myths.

Myths About the Risks of Superhuman AI

Many AI researchers roll their eyes when seeing this headline: “Stephen Hawking warns that rise of robots may be disastrous for mankind.” Just as many have lost count of how many similar articles they’ve seen. Typically, these articles are accompanied by an evil-looking robot carrying a weapon, and they suggest we should worry about robots rising up and killing us because they’ve become conscious and/or evil. On a lighter note, such articles are actually rather impressive, because they succinctly summarize the scenario that AI researchers don’t worry about. That scenario combines as many as three separate misconceptions: concern about consciousness, evil, and robots.

Source: Future of Life Institute

Future of Life Institute: The Future of Life Institute is a volunteer-run research and outreach organization in the Boston area that works to mitigate existential risks facing humanity, particularly existential risk from advanced AI. Its founders include MIT cosmologist Max Tegmark and Skype co-founder Jaan Tallinn, and its board of advisors includes cosmologist Stephen Hawking and entrepreneur Elon Musk.
